***This article jumps straight to code, so I assume you understand the common ANN/DL layers (e.g. fully connected layers, convolutional layers, embeddings) and know your way around Keras + TensorFlow. There is also a pretty neat review of Multi-Task Learning that I encourage you to read.***

Deep Learning (DL) is a very flexible family of algorithms that apply artificial neural networks (ANNs) to many types of classification and regression problems, and it has had great success, surpassing human-level accuracy on many specific tasks. I would like to tell you about some DL architectures that are straightforward and very useful.

There is a sub-field of Machine Learning (ML) that attempts to develop models that can perform several different tasks at once by sharing domain information about a specific problem. It is aptly named Multi-Task Learning (MTL).

I'm going to jump right in and show the architecture and code so you can start prototyping. These architectures are not fully functional but are meant to get you going right away.

Two or more Regression Tasks

Let's say you are tasked with predicting both a user's cholesterol level and weight. You are given several variables from demographic, socioeconomic, and geographical sources, and even the users' textual reviews from a food review app.

If you look at this problem you can see that both objective variables might share a lot of feature variables (i.e. predictors) that may belong to different knowledge domains. So by using MTL you can transfer learning from one domain to the other.

This same technique applies to two or more classification tasks; you would only change the final activation to either softmax (multiclass) or sigmoid (binary).

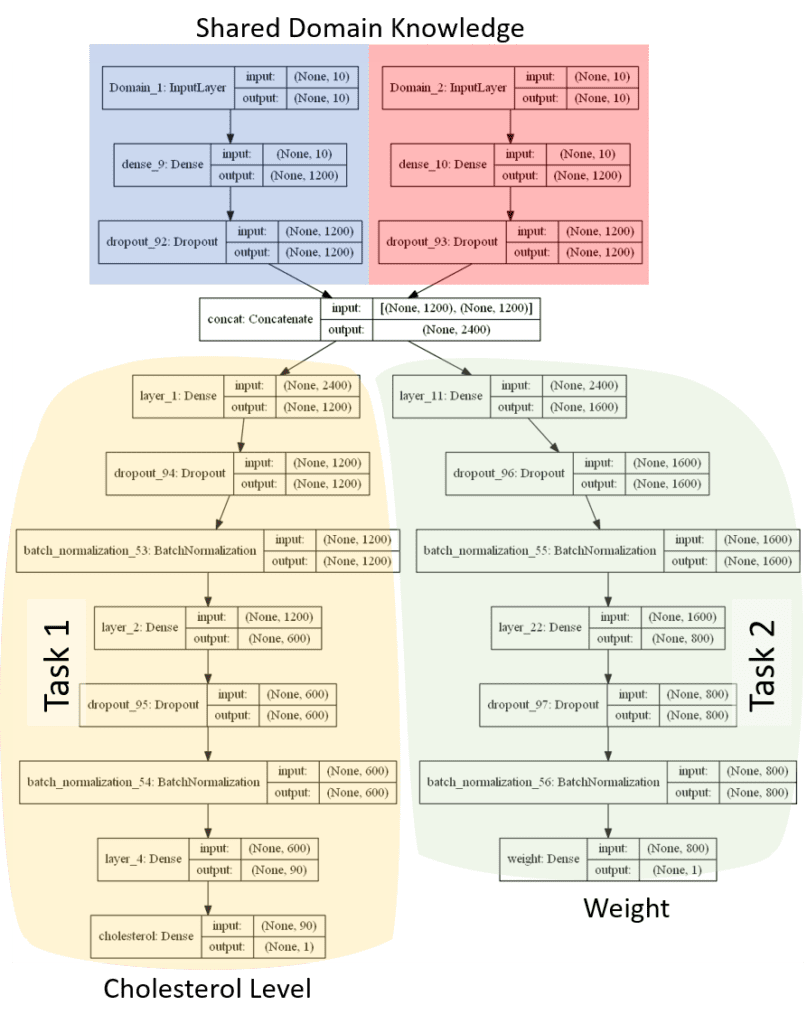

Example Architecture №1:

Two different feature domains and two regression tasks, with fully connected layers only.
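A minimal sketch of this first architecture could look like the following. The feature counts, layer widths, and the input and output names (`demographic`, `socioeconomic`, `cholesterol`, `weight`) are assumptions made for illustration, not values from a real dataset.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical feature counts for the two domains (illustration only).
N_DEMOGRAPHIC = 8
N_SOCIOECONOMIC = 5


def build_fc_mtl_model():
    # One input per feature domain.
    demo_in = keras.Input(shape=(N_DEMOGRAPHIC,), name="demographic")
    socio_in = keras.Input(shape=(N_SOCIOECONOMIC,), name="socioeconomic")

    # Domain-specific fully connected layers.
    demo = layers.Dense(16, activation="relu")(demo_in)
    socio = layers.Dense(16, activation="relu")(socio_in)

    # Shared trunk: both tasks learn from the merged representation.
    shared = layers.Concatenate()([demo, socio])
    shared = layers.Dense(32, activation="relu")(shared)

    # One head per task; linear activation because both are regressions.
    cholesterol = layers.Dense(1, activation="linear", name="cholesterol")(shared)
    weight = layers.Dense(1, activation="linear", name="weight")(shared)

    model = keras.Model([demo_in, socio_in], [cholesterol, weight])
    model.compile(
        optimizer="adam",
        loss={"cholesterol": "mse", "weight": "mse"},
        # Equal weights are a starting point; tune them per problem.
        loss_weights={"cholesterol": 1.0, "weight": 1.0},
    )
    return model


model = build_fc_mtl_model()
```

The total loss is the weighted sum of the per-task losses, so `loss_weights` is the first knob to turn when one task dominates training.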

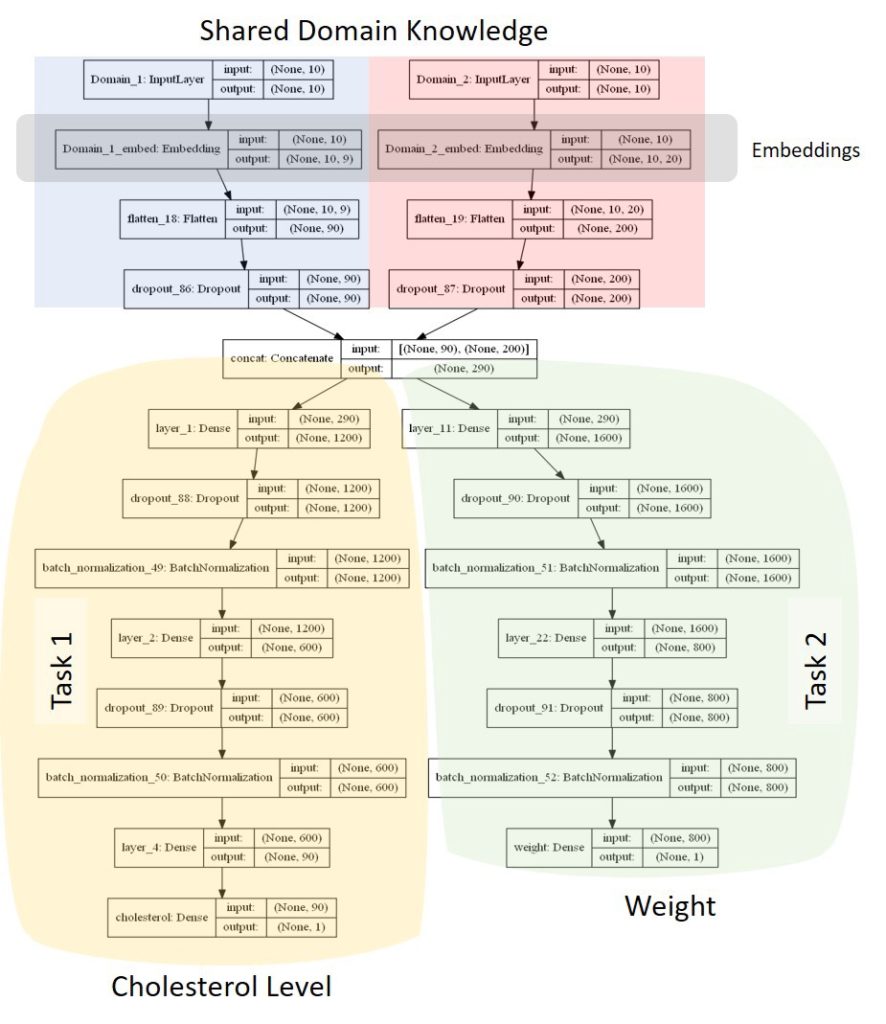

Example Architecture №2:

Two different feature domains, with domain-specific embeddings. This architecture is very powerful because it lets you learn vector embeddings for each domain. These embeddings can be useful for further understanding of the problem, such as segmentation, ranking, etc.
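One way to sketch this, assuming the two domains are an integer-coded region (geographical) and a tokenized review (text), is to give each domain its own `Embedding` layer. The vocabulary sizes, sequence length, and layer names below are hypothetical.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical sizes, chosen only for illustration.
N_REGIONS = 50        # distinct region codes in the geographical domain
REVIEW_VOCAB = 1000   # vocabulary size of the tokenized reviews
REVIEW_LEN = 20       # reviews padded or truncated to this many tokens


def build_embedding_mtl_model():
    region_in = keras.Input(shape=(1,), dtype="int32", name="region")
    review_in = keras.Input(shape=(REVIEW_LEN,), dtype="int32", name="review")

    # Each domain gets its own embedding table.
    region_emb = layers.Embedding(N_REGIONS, 4, name="region_embedding")(region_in)
    region_vec = layers.Flatten()(region_emb)

    review_emb = layers.Embedding(REVIEW_VOCAB, 16, name="review_embedding")(review_in)
    # Average the token vectors into one fixed-size review vector.
    review_vec = layers.GlobalAveragePooling1D()(review_emb)

    # Shared trunk on top of the concatenated domain vectors.
    shared = layers.Concatenate()([region_vec, review_vec])
    shared = layers.Dense(32, activation="relu")(shared)

    cholesterol = layers.Dense(1, name="cholesterol")(shared)
    weight = layers.Dense(1, name="weight")(shared)

    model = keras.Model([region_in, review_in], [cholesterol, weight])
    model.compile(optimizer="adam", loss={"cholesterol": "mse", "weight": "mse"})
    return model


model = build_embedding_mtl_model()

# The embedding matrix can be pulled out for segmentation or ranking.
region_vectors = model.get_layer("region_embedding").get_weights()[0]
```

Naming the embedding layers makes it easy to retrieve the learned vectors after training; here `region_vectors` holds one row per region code.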

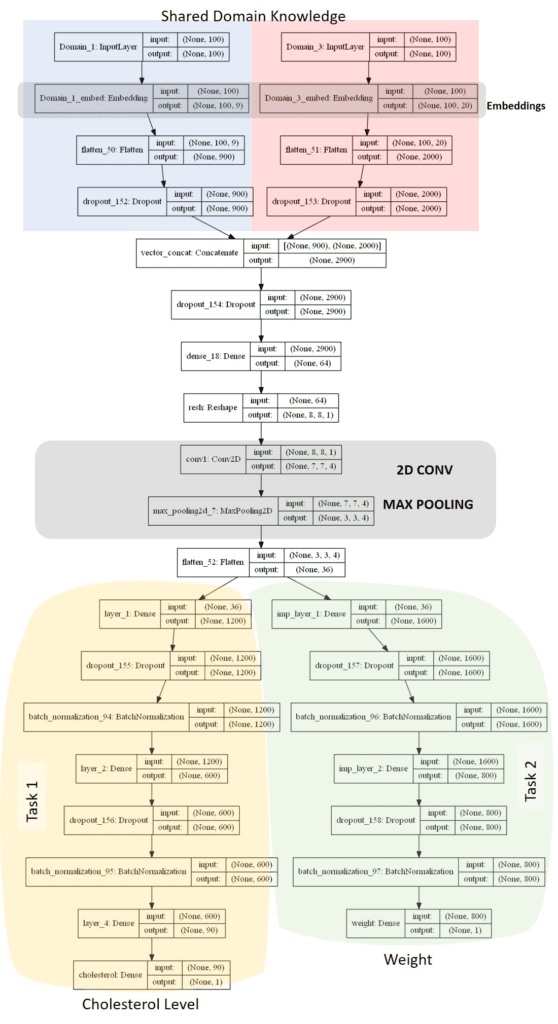

Example Architecture №3:

Now let’s say you want to apply 2D convolutional layers with max pooling to the problem. This is useful when you would like to study the structure and relations among features that the convolutional filters learn, or when one of your domains consists of images. However, if you have only tabular data and still want to use 2D convolutions, you need to structure your problem so that it fits the 2D-convolution paradigm.
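For tabular data, one possible way to fit the 2D-convolution paradigm is to project each domain to a vector of the same length and stack those vectors as the rows of a small single-channel "image". The sketch below assumes that layout; the feature counts, projection size, and kernel shapes are illustrative choices, not recommendations.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical feature counts and projection size (illustration only).
N_DEMOGRAPHIC = 8
N_SOCIOECONOMIC = 5
PROJ_DIM = 16


def build_conv_mtl_model():
    demo_in = keras.Input(shape=(N_DEMOGRAPHIC,), name="demographic")
    socio_in = keras.Input(shape=(N_SOCIOECONOMIC,), name="socioeconomic")

    # Project each domain to the same length so they can be stacked.
    demo = layers.Dense(PROJ_DIM, activation="relu")(demo_in)
    socio = layers.Dense(PROJ_DIM, activation="relu")(socio_in)

    # Turn each projection into one row of a (rows, cols, channels) grid.
    demo_row = layers.Reshape((1, PROJ_DIM, 1))(demo)
    socio_row = layers.Reshape((1, PROJ_DIM, 1))(socio)
    grid = layers.Concatenate(axis=1)([demo_row, socio_row])  # (2, 16, 1)

    # Filters span both domain rows, then pooling halves the width.
    x = layers.Conv2D(8, kernel_size=(2, 3), padding="same", activation="relu")(grid)
    x = layers.MaxPooling2D(pool_size=(1, 2))(x)  # (2, 8, 8)
    x = layers.Flatten()(x)
    shared = layers.Dense(32, activation="relu")(x)

    cholesterol = layers.Dense(1, name="cholesterol")(shared)
    weight = layers.Dense(1, name="weight")(shared)

    model = keras.Model([demo_in, socio_in], [cholesterol, weight])
    model.compile(optimizer="adam", loss={"cholesterol": "mse", "weight": "mse"})
    return model


model = build_conv_mtl_model()
```

If one of the domains is an actual image, its input can go straight into the convolutional stack and the tabular domain can be concatenated after the `Flatten` layer instead.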

Mixed Regression and Classification Tasks

Now imagine you are given the task of building an algorithm that predicts both cholesterol level and the probability that a user will fall into each of three categories: (A) not sick and not hospitalized, (B) sick but not hospitalized, or (C) sick and hospitalized.

As before, it seems highly plausible that our model could benefit from MTL, since both objective variables might share information from different domains. So, let's say we have data from medical records as well as demographic, socioeconomic, and geographical data.

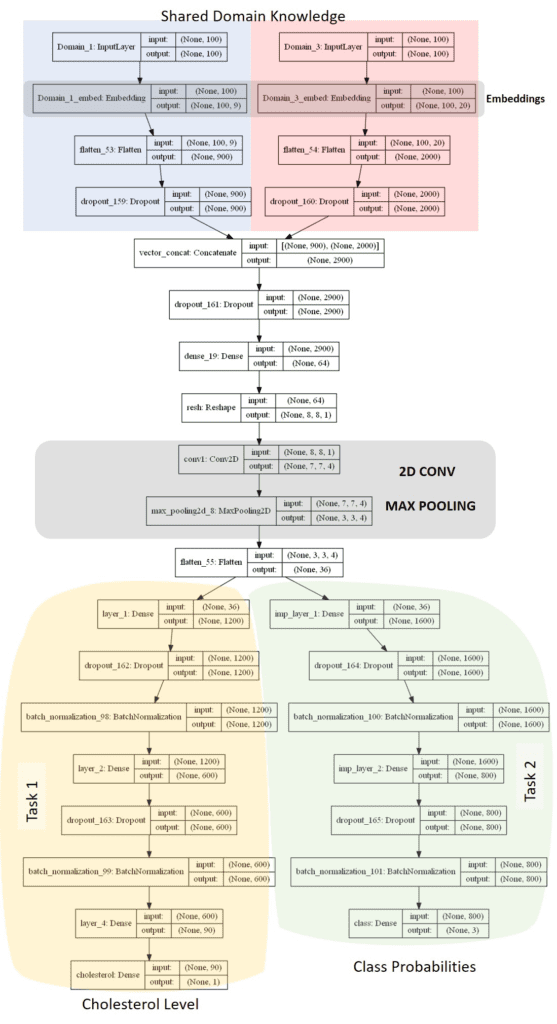

Example Architecture №1:

Two different feature domains with individual embeddings and a 2D convolution, with two tasks (one regression and one multiclass classification).
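A rough sketch of such a mixed model follows. It assumes both domains arrive as integer-coded categorical fields, embeds each field, stacks the embedded fields into a grid for the convolution, and ends with a linear head for cholesterol and a 3-way softmax head for the health category. All sizes and names are hypothetical.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical sizes for illustration only.
N_MEDICAL_CODES = 200   # vocabulary of integer-coded medical record fields
N_MEDICAL_FIELDS = 6
N_DEMO_CODES = 100      # vocabulary of integer-coded demographic fields
N_DEMO_FIELDS = 4
EMB_DIM = 8
N_CLASSES = 3           # categories (A), (B), (C)


def build_mixed_mtl_model():
    medical_in = keras.Input(shape=(N_MEDICAL_FIELDS,), dtype="int32", name="medical")
    demo_in = keras.Input(shape=(N_DEMO_FIELDS,), dtype="int32", name="demographic")

    # Individual embedding table per domain.
    medical_emb = layers.Embedding(N_MEDICAL_CODES, EMB_DIM, name="medical_embedding")(medical_in)
    demo_emb = layers.Embedding(N_DEMO_CODES, EMB_DIM, name="demographic_embedding")(demo_in)

    # Stack embedded fields into a (fields, EMB_DIM) grid, then add a channel axis.
    grid = layers.Concatenate(axis=1)([medical_emb, demo_emb])  # (10, 8)
    grid = layers.Reshape((N_MEDICAL_FIELDS + N_DEMO_FIELDS, EMB_DIM, 1))(grid)

    x = layers.Conv2D(8, (3, 3), padding="same", activation="relu")(grid)
    x = layers.MaxPooling2D((2, 2))(x)  # (5, 4, 8)
    x = layers.Flatten()(x)
    shared = layers.Dense(32, activation="relu")(x)

    # Regression head and multiclass classification head.
    cholesterol = layers.Dense(1, activation="linear", name="cholesterol")(shared)
    status = layers.Dense(N_CLASSES, activation="softmax", name="status")(shared)

    model = keras.Model([medical_in, demo_in], [cholesterol, status])
    model.compile(
        optimizer="adam",
        # Integer class labels (0, 1, 2) are assumed for the status task.
        loss={"cholesterol": "mse", "status": "sparse_categorical_crossentropy"},
        loss_weights={"cholesterol": 1.0, "status": 1.0},
    )
    return model


model = build_mixed_mtl_model()
```

Because mean squared error and cross-entropy live on different scales, it usually helps to standardize the regression target or adjust `loss_weights` so that neither task drowns out the other.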

I will show you only this architecture, since you can iterate over it to better suit your needs.

Conclusion

Hopefully this article will help you quickly start prototyping functional multi-task deep learning architectures if you are interested in the topic.

Cheers!